The Rise of Quantum Computing: Revolutionizing Data Processing

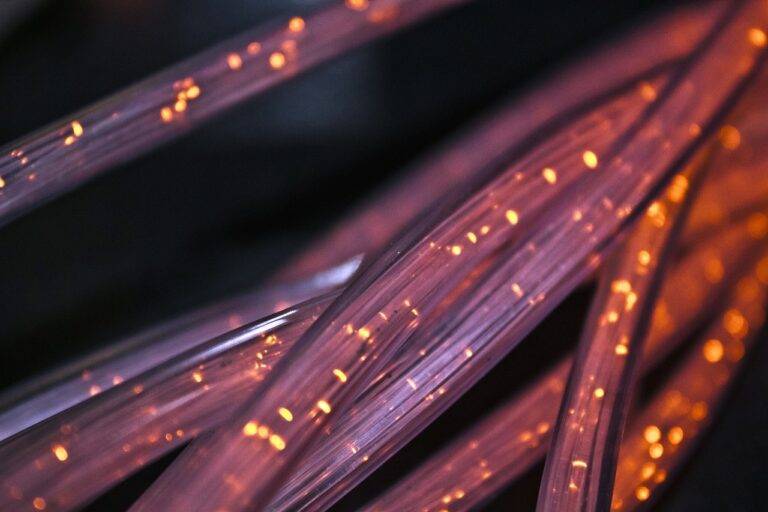

Quantum computing is a field that harnesses the principles of quantum mechanics to process information in ways that classical computers cannot. While classical computers rely on bits, binary units of information that are always either 0 or 1, quantum computers use quantum bits, or qubits, which can exist in a superposition: a weighted combination of 0 and 1 that resolves to a single value only when measured.

One of the most intriguing features of quantum computing is entanglement, where qubits become correlated so strongly that the state of one cannot be described independently of the other, regardless of the distance between them. Measuring one entangled qubit immediately tells you what a matching measurement of its partner will show, although this cannot be used to send information faster than light. Combined with superposition, entanglement lets quantum computers solve certain problems far more efficiently than any known classical method. As researchers continue to explore potential applications, the technology could transform numerous industries and enable significant advances in science and technology.

Understanding Quantum Bits (Qubits)

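Quantum bits, or qubits, are the fundamental building blocks of quantum computing. Unlike classical bits, which can only exist in a state of 0 or 1, qubits can exist in a superposition of both states. A qubit's state is described by two complex numbers called amplitudes, and the squared magnitude of each gives the probability of measuring 0 or 1. A quantum computer does not simply run many calculations at once and read out every answer. Instead, quantum algorithms manipulate the amplitudes so that correct answers become likely when the qubits are measured, which is what lets quantum computers solve certain complex problems much faster than classical computers.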
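
To make superposition concrete, here is a minimal sketch in plain Python that models one qubit as a pair of complex amplitudes. The helper names `hadamard` and `probabilities` are chosen for this illustration and do not come from any quantum library. The Hadamard gate is a standard operation that turns the definite state 0 into an equal superposition of 0 and 1.

```python
import math

def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (a, b)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state):
    """Probability of each outcome is the squared magnitude of its amplitude."""
    return tuple(abs(amp) ** 2 for amp in state)

zero = (1 + 0j, 0 + 0j)        # the definite state 0
plus = hadamard(zero)          # equal superposition of 0 and 1
p0, p1 = probabilities(plus)   # chance of measuring 0 and of measuring 1
print(p0, p1)
```

Both probabilities come out at roughly one half, so measuring this qubit behaves like a fair coin flip. Applying `hadamard` a second time returns the qubit to the definite state 0, something a classical coin flip cannot do.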

One of the key characteristics of qubits is their ability to be entangled with each other. Entanglement is a phenomenon where the state of one qubit cannot be described independently of the state of another, regardless of the physical distance between them, so measurements on the two qubits produce correlated results. This interconnectedness enables quantum computers to perform operations that would be impractical on classical computers, making them particularly well-suited for tasks such as optimization, cryptography, and simulating quantum systems.
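
Entanglement can be sketched the same way, using a list of four amplitudes for the two-qubit outcomes 00, 01, 10, and 11. The function names and the fixed random seed below are assumptions made for this illustration. The sketch applies a Hadamard gate to the first qubit and then a controlled-NOT gate, a standard recipe for producing an entangled pair known as a Bell state.

```python
import math
import random

LABELS = ["00", "01", "10", "11"]  # first qubit is the left bit

def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]

def cnot(state):
    """Flip the second qubit when the first is 1 (swaps the 10 and 11 amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure(state, rng):
    """Sample one outcome with probability equal to the squared amplitude."""
    weights = [abs(a) ** 2 for a in state]
    return rng.choices(LABELS, weights=weights)[0]

bell = cnot(hadamard_on_first([1, 0, 0, 0]))  # start from 00
rng = random.Random(0)                         # seeded only for repeatability
outcomes = [measure(bell, rng) for _ in range(1000)]
print(sorted(set(outcomes)))
```

Every sampled outcome is either 00 or 11: each qubit on its own looks random, yet the two always agree. This is a classical simulation of the statistics, not a demonstration on quantum hardware.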

Differences Between Classical and Quantum Computing

In classical computing, information is stored and processed using bits that are each either 0 or 1. A classical processor works through its instructions step by step, and every computation ultimately reduces to logical operations such as AND, OR, and NOT applied to those bits.
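
As a small illustration of how classical computation is built from these logical operations, the sketch below composes AND, OR, and NOT into a half adder, a circuit that adds two bits. The function names are chosen for this example.

```python
def AND(a, b):
    return a & b

def OR(a, b):
    return a | b

def NOT(a):
    return 1 - a

def XOR(a, b):
    """Exclusive OR built only from AND, OR, and NOT."""
    return AND(OR(a, b), NOT(AND(a, b)))

def half_adder(a, b):
    """Add two bits, returning (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

Each bit here always holds a definite 0 or 1, and each gate produces exactly one definite output for its inputs.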

Quantum computing, on the other hand, operates on quantum bits, or qubits. Unlike classical bits, qubits can exist in superpositions and can be entangled with one another, so a register of n qubits is described by 2^n amplitudes rather than by n definite values. Quantum algorithms exploit this to solve certain problems faster, in some cases exponentially faster than the best known classical methods. Because a measurement returns only one result, however, the speedup depends on the algorithm and does not apply to every task.
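
One way to see the gap between the two models is to count what a classical machine would need to store in order to describe a quantum register exactly. Describing n qubits takes 2^n complex amplitudes, so the count doubles with every added qubit. The sketch below assumes 16 bytes per amplitude (two 64-bit floats), which is a common choice for simulators but is an assumption of this example.

```python
BYTES_PER_AMPLITUDE = 16  # assumed: one complex number stored as two 64-bit floats

def amplitude_count(n_qubits):
    """Number of amplitudes needed to describe n qubits."""
    return 2 ** n_qubits

def memory_bytes(n_qubits):
    """Memory needed to store the full state under the assumption above."""
    return amplitude_count(n_qubits) * BYTES_PER_AMPLITUDE

for n in (10, 20, 30, 40):
    gib = memory_bytes(n) / 2 ** 30
    print(f"{n} qubits: {amplitude_count(n)} amplitudes, {gib:g} GiB")
```

Under this assumption, 30 qubits already require 16 GiB and 40 qubits require 16,384 GiB, which is why exact classical simulation becomes impractical well before a quantum register reaches even a modest size.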

What is quantum computing?

Quantum computing is a type of computing that uses quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data.

What are qubits in quantum computing?

Qubits, or quantum bits, are the fundamental units of quantum information. Unlike classical bits, which can only be in a state of 0 or 1, qubits can exist in a superposition of these states.

How do classical and quantum computing differ?

Classical computing processes information using bits that are in a state of either 0 or 1, while quantum computing uses qubits that can exist in a superposition of states. Quantum computing also takes advantage of entanglement, which allows qubits to be correlated in ways that classical bits cannot.

What are the advantages of quantum computing over classical computing?

Quantum computing has the potential to solve certain problems much faster than classical computing, especially in areas like cryptography, optimization, and simulation. Quantum computers could also enable breakthroughs in fields like drug discovery and materials science.

Are there any limitations to quantum computing?

Quantum computing is still in the early stages of development, and there are many technical challenges that need to be overcome, such as error correction and scalability. Additionally, quantum computers may not be suitable for all types of problems, as some tasks are better suited to classical computing.